Motivation & Method

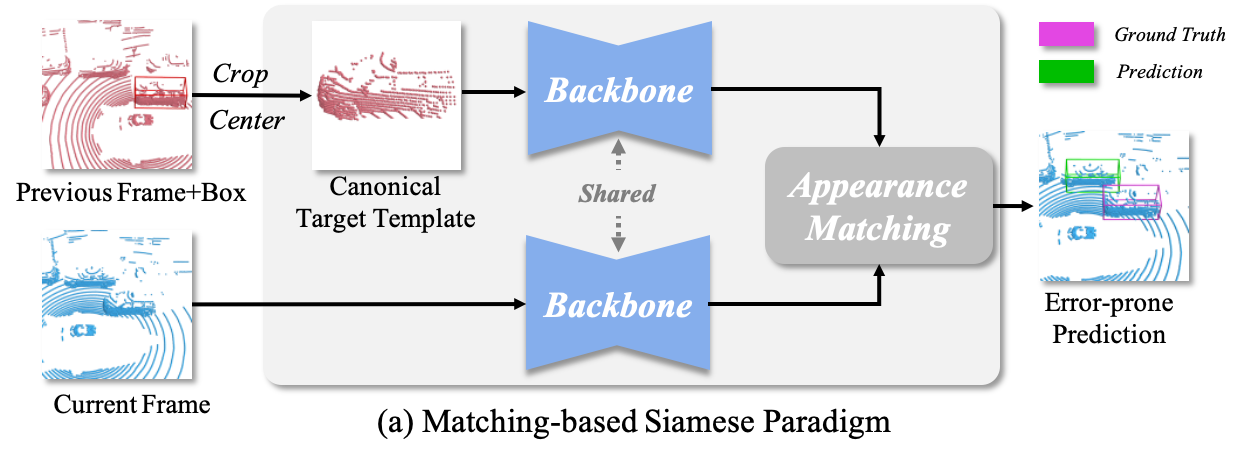

For single object tracking in LiDAR scenes (LiDAR SOT), previous methods rely on appearance matching to localize the target using a target template.

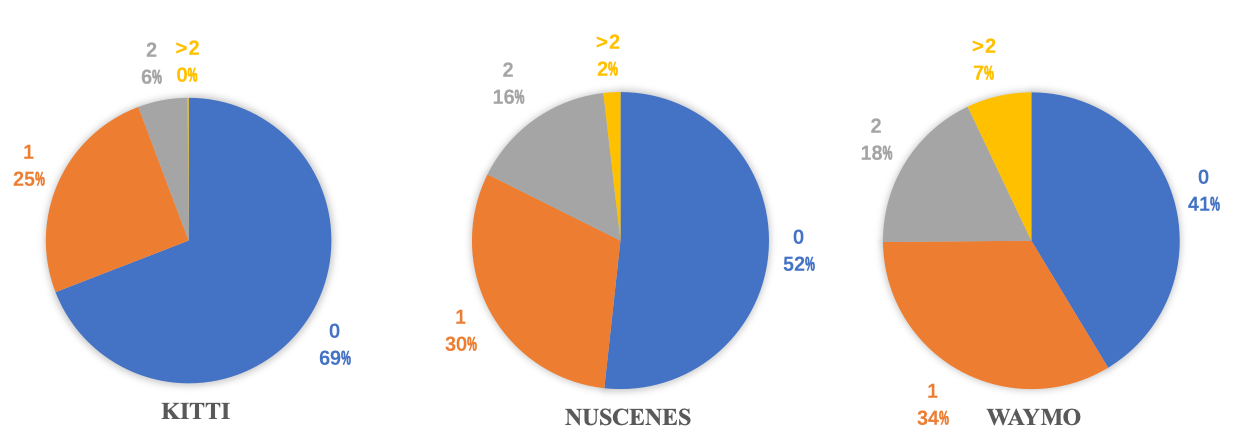

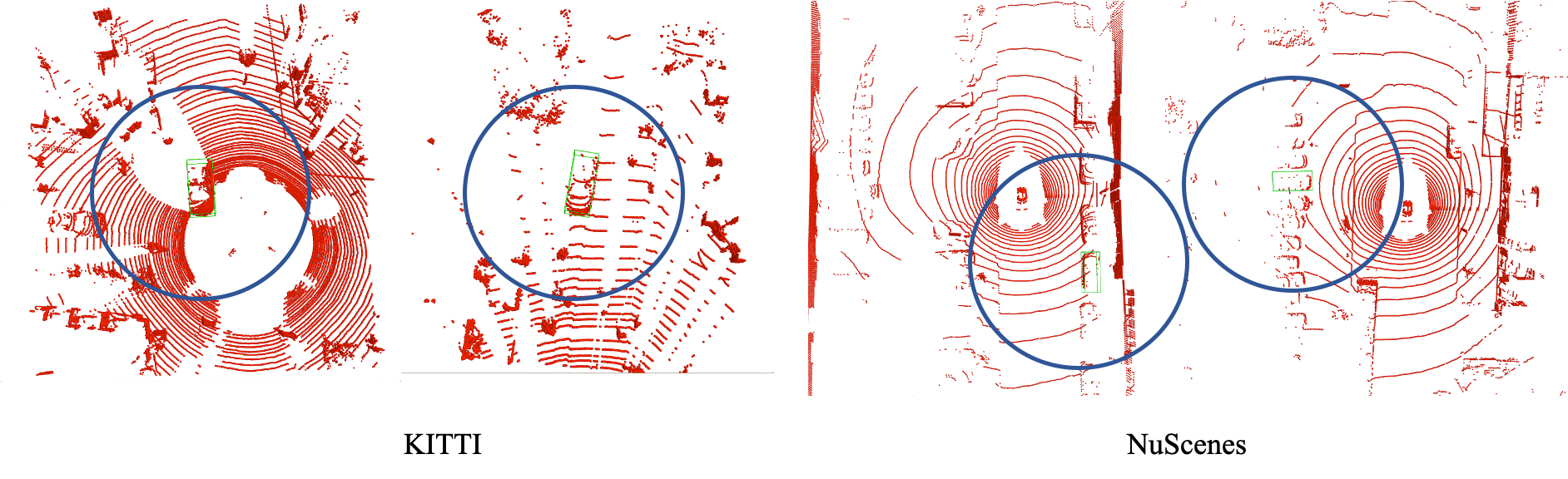

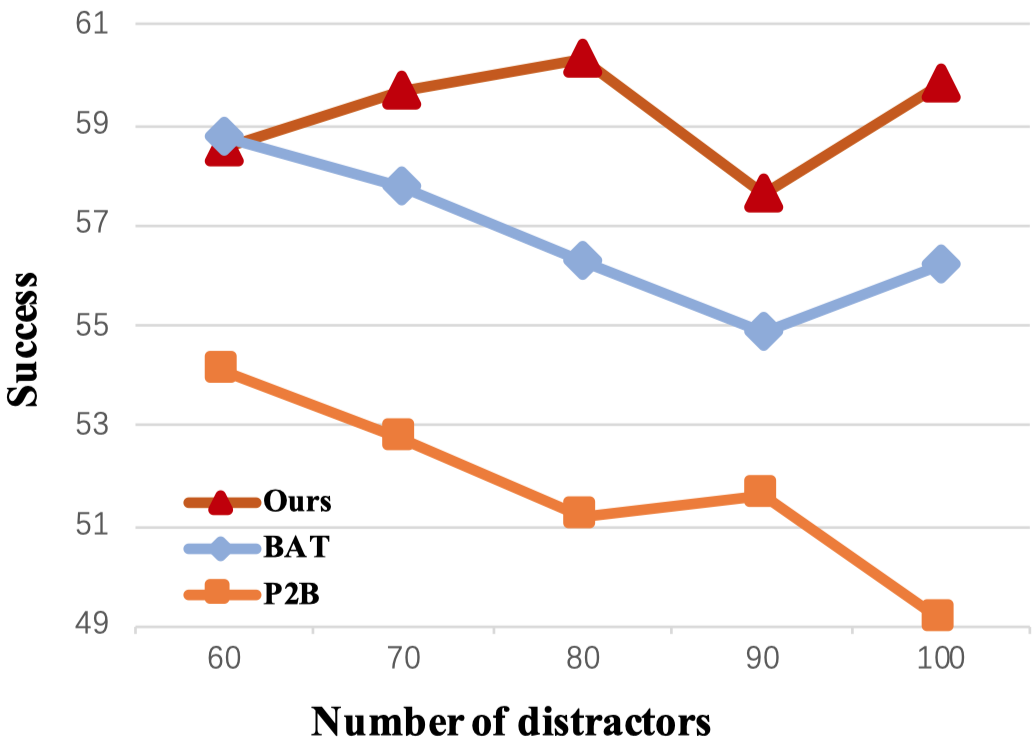

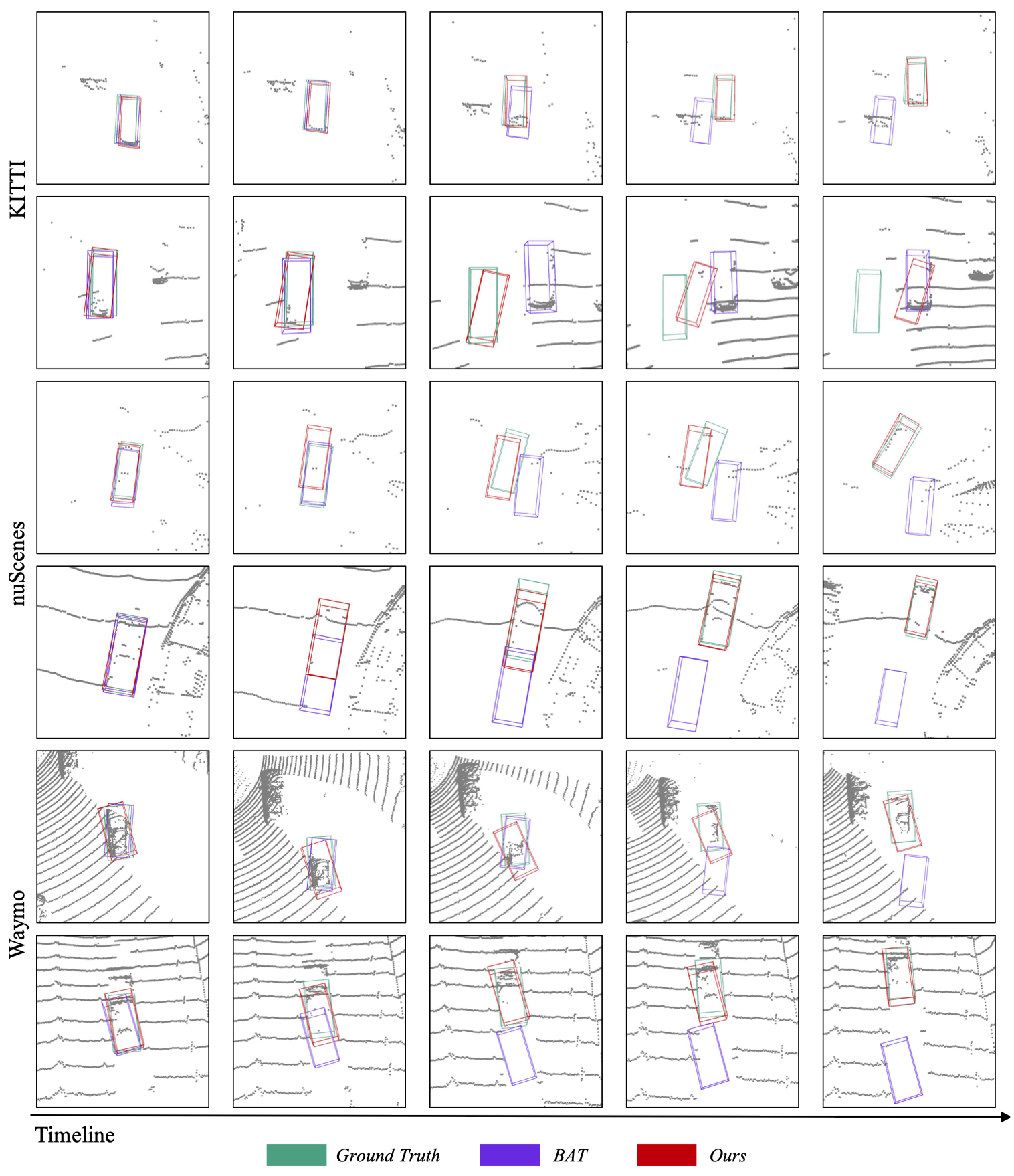

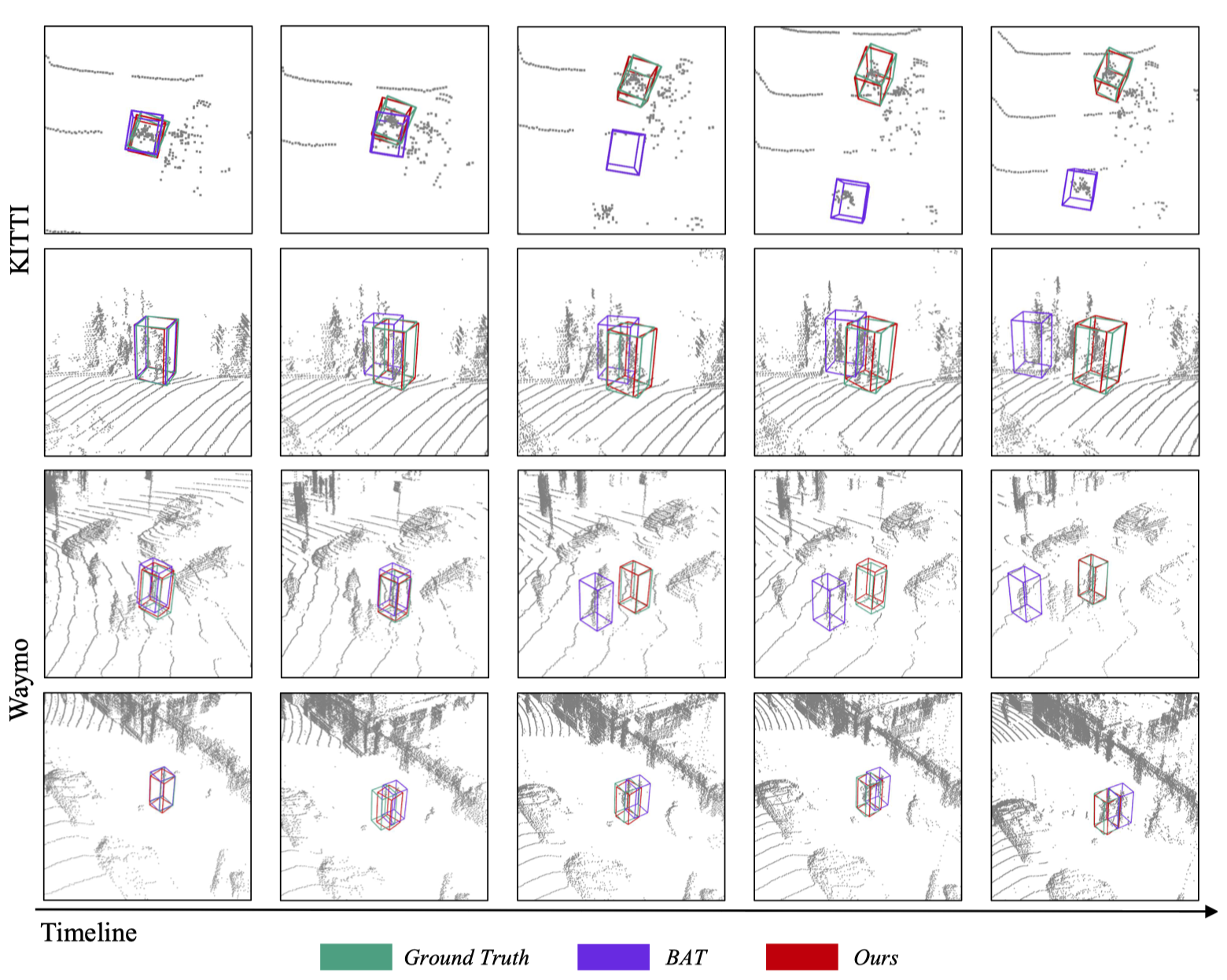

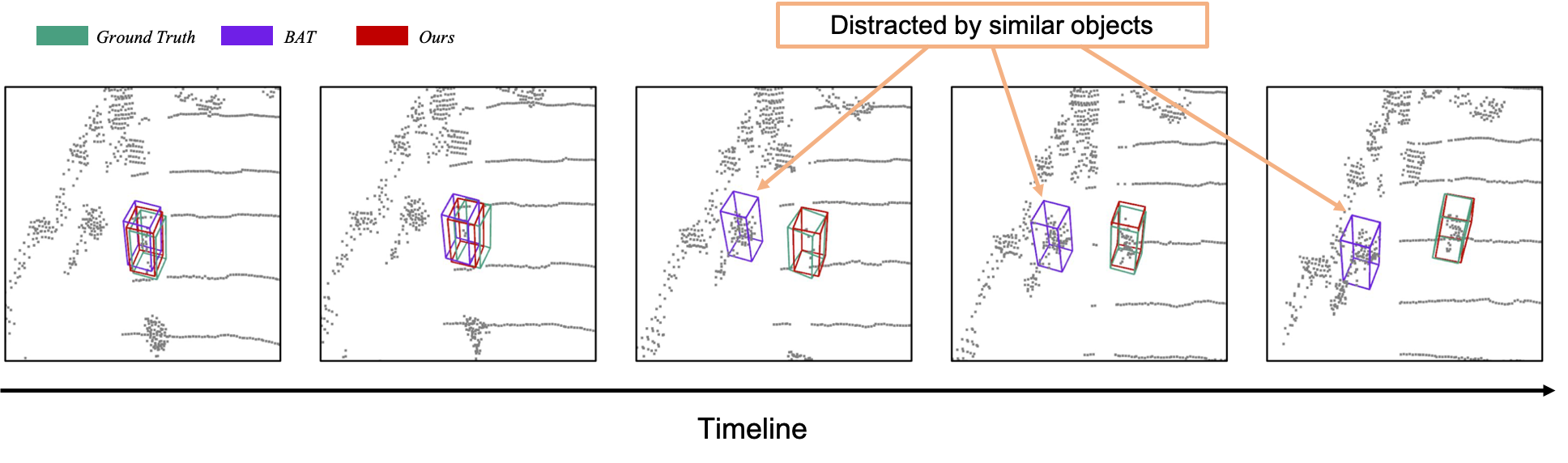

However, as shown in the following figure, matching-based approaches become unreliable under drastic appearance changes and in the presence of distractors, both of which are common in LiDAR scenes.

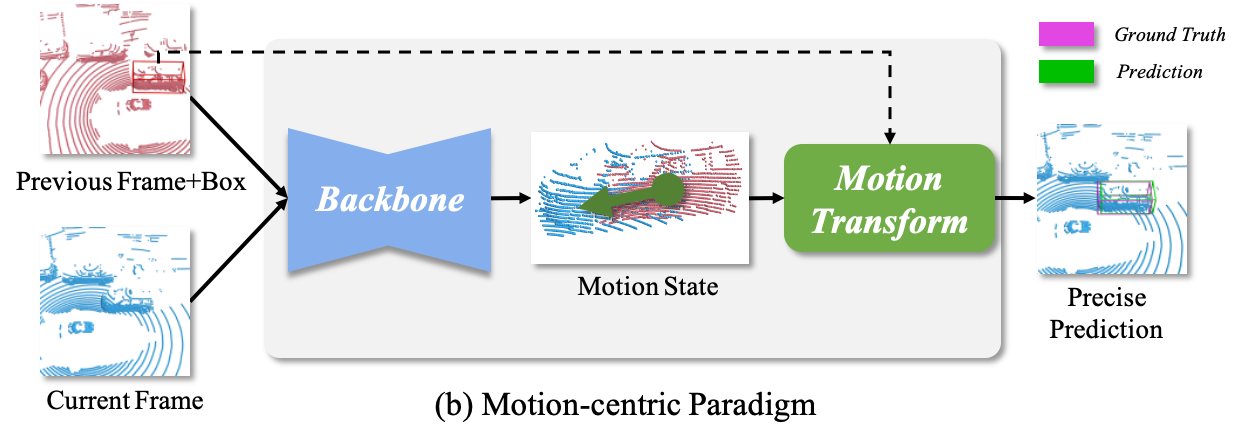

Since the task deals with a dynamic scene across a video sequence, the target's movements between successive frames provide useful cues for distinguishing distractors and handling appearance changes. We present, for the first time, a motion-centric paradigm for LiDAR SOT. By explicitly learning from various "relative target motions" in the data, the paradigm robustly localizes the target in the current frame via motion transformation.

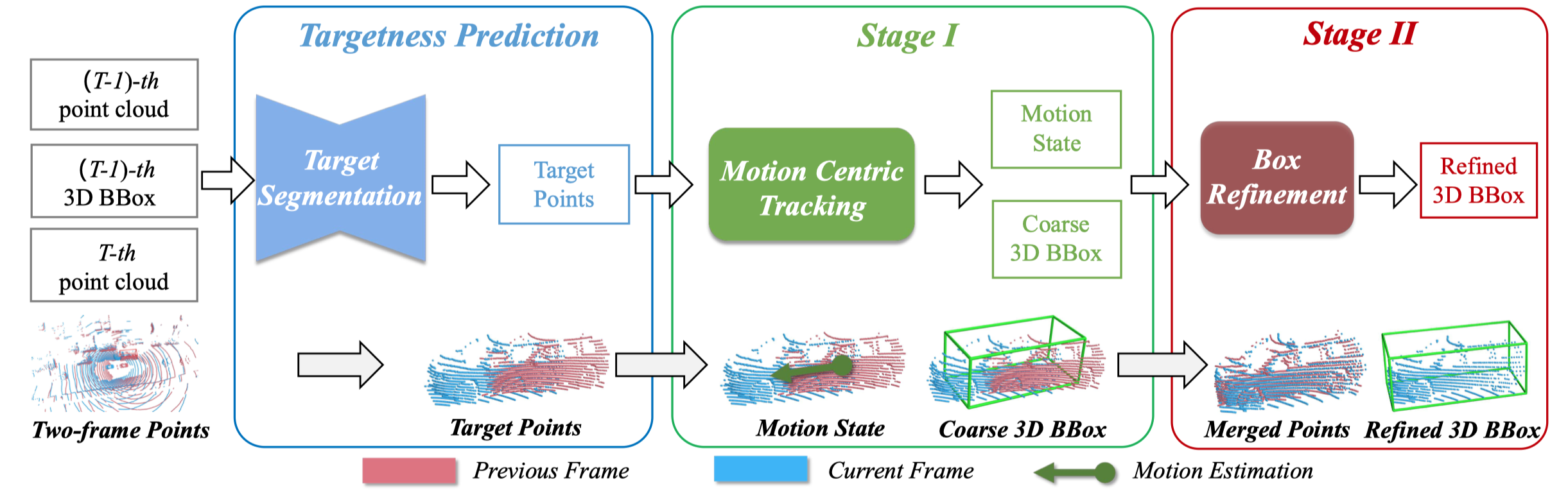

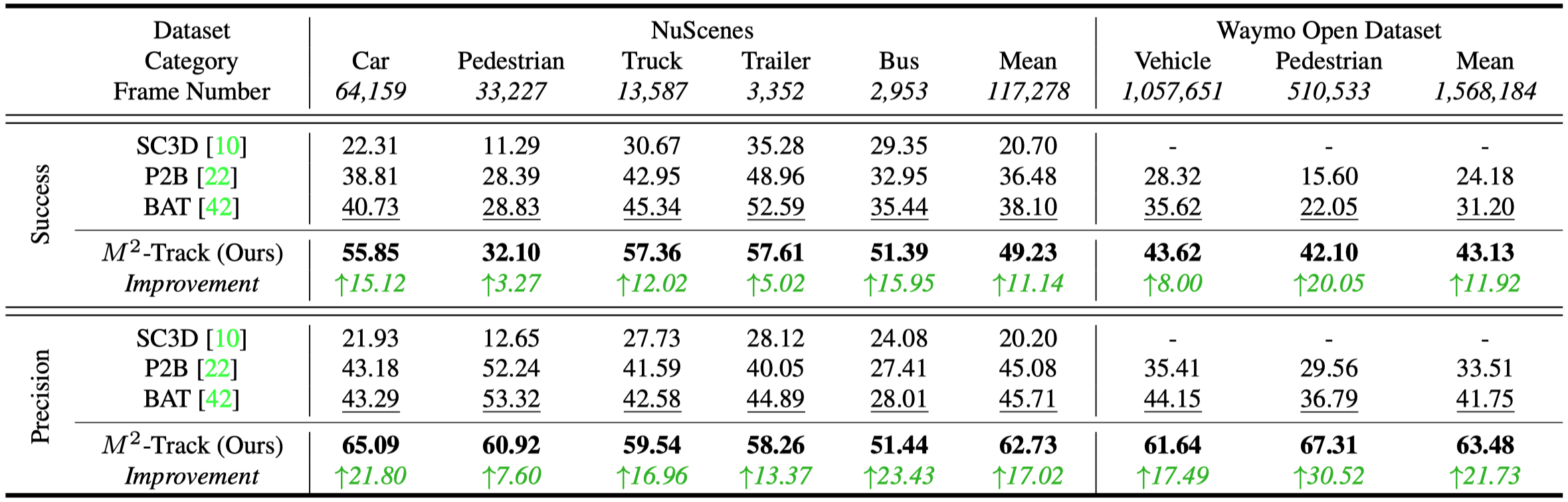

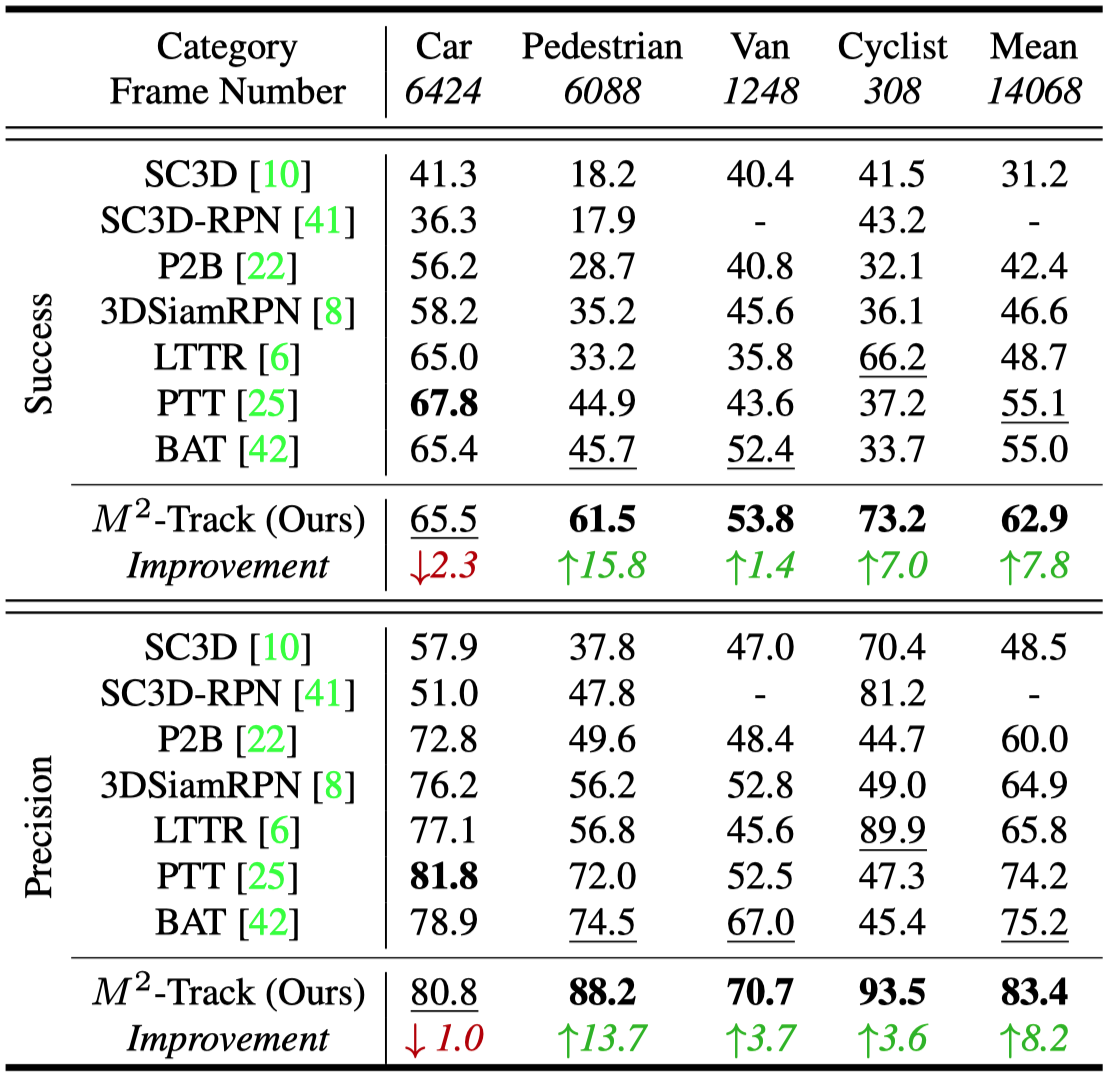

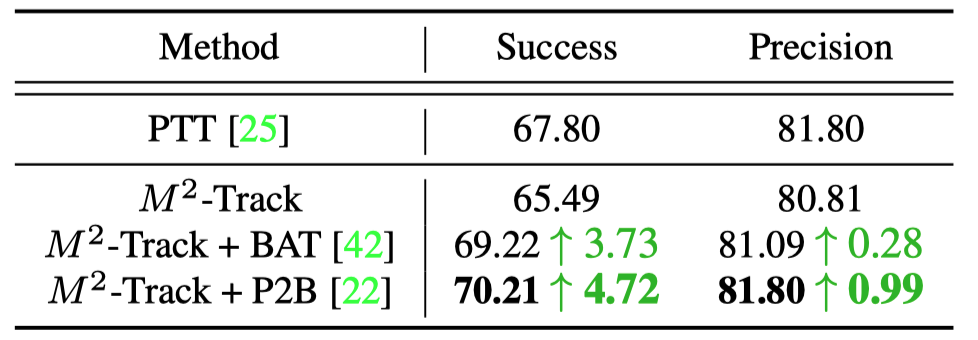
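To make "localizing the target via motion transformation" concrete, here is a minimal sketch of applying a relative target motion to the previous frame's box. It is an illustration under stated assumptions, not the M^2-Track implementation: the names `Box`, `RelativeMotion`, and `apply_motion` are hypothetical, the motion is assumed to be a translation plus a yaw change expressed in the previous box's local frame, and the box size is assumed to stay fixed. In the actual tracker, the relative motion would be predicted by a network from the point clouds of the two frames.

```python
import math
from dataclasses import dataclass


@dataclass
class Box:
    """Target box center and heading (hypothetical type; size assumed fixed)."""
    x: float
    y: float
    z: float
    yaw: float  # heading around the vertical axis, in radians


@dataclass
class RelativeMotion:
    """Assumed relative target motion between two successive frames."""
    dx: float
    dy: float
    dz: float
    dyaw: float


def wrap_angle(angle: float) -> float:
    """Wrap an angle to the interval [-pi, pi)."""
    return (angle + math.pi) % (2.0 * math.pi) - math.pi


def apply_motion(prev: Box, motion: RelativeMotion) -> Box:
    """Move the previous box by a motion given in the previous box's local frame."""
    c, s = math.cos(prev.yaw), math.sin(prev.yaw)
    # Rotate the local translation into the scene frame, then add it to the center.
    x = prev.x + c * motion.dx - s * motion.dy
    y = prev.y + s * motion.dx + c * motion.dy
    z = prev.z + motion.dz
    return Box(x, y, z, wrap_angle(prev.yaw + motion.dyaw))


# Usage: a target heading along +y moves 1 m forward and turns slightly.
prev_box = Box(x=10.0, y=5.0, z=0.0, yaw=math.pi / 2)
curr_box = apply_motion(prev_box, RelativeMotion(dx=1.0, dy=0.0, dz=0.0, dyaw=0.1))
```

Because the current box is derived from the previous box and a predicted motion, rather than from a similarity score against a template, a nearby object that merely looks like the target does not directly compete for the match.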

Based on the motion-centric paradigm, we propose a two-stage tracker, M^2-Track. In the first stage, M^2-Track localizes the target within successive frames via motion transformation. In the second stage, it refines the target box through motion-assisted shape completion. M^2-Track significantly outperforms previous state-of-the-art methods and shows further potential when simply integrated with appearance matching.